This nails the qualitative essence of ChatGPT; I’ve been tinkering with it, pushing at the boundaries and exploring what it does and doesn’t do well.

It’s profoundly bland to the point of triviality, but it’s great at mimicking rhetorical structure.

This overlaps with my professional interests in a useful way: Mark Baker’s peerless 2018 book ‘Structured Writing: Rhetoric and Process’ explores in depth how “structured content” is often really the practice of codifying rhetoric. https://xmlpress.net/publications/structured-writing/

We’re used to talking about structured content as “form fields” or “XML tags” or “APIs,” but beneath the technical structure, the most important part is identifying and understanding the semantic structure: the distinctive forms of particular kinds of messages.

An essay is structurally different from a speech, even if both are flattened to plain text and pasted into a body field. As human readers and listeners, we perceive those differences, and they shape how effective the content is in different contexts.

Some examples:

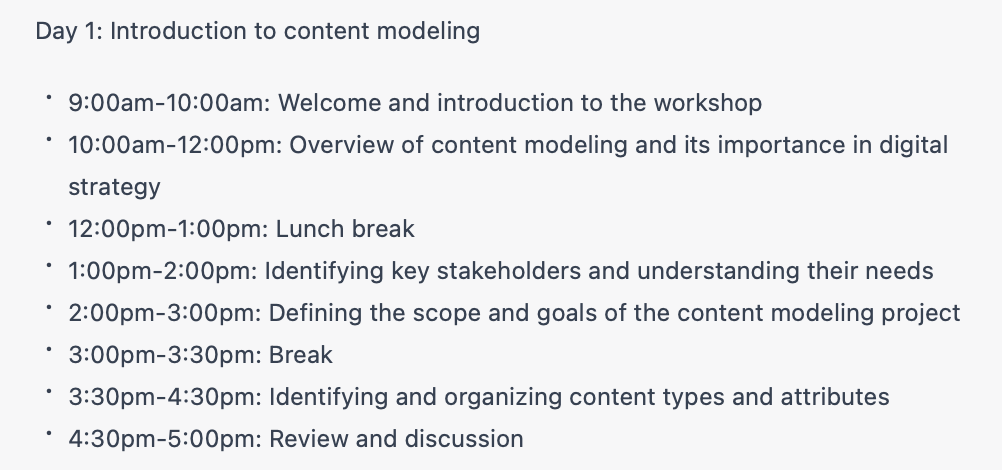

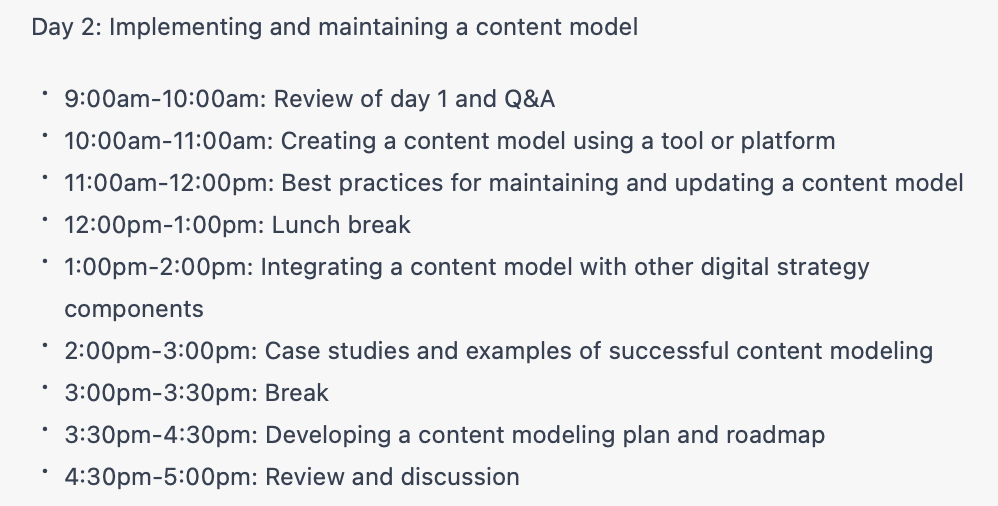

> Write a schedule for a content modeling workshop taught by digital strategy experts


The thing is, that’s not a bad outline. It’s super bland topically, but it has decent pacing and a nice progression of concepts, from intros and exercises on the first day to a ‘where to go from here’ wrap-up in the last session of day 3.

What it gets right is exactly what we try to get right when building assistive structures for content authors: paving the best paths, and providing defaults that shape content into a form we know works and that is common across most instances of a particular type of message.

Along the same lines, asking it to ‘write a haiku about peanut butter’ and ‘write a limerick about peanut butter’ resulted in… well, bad poetry. Really bad.

But it nailed haiku-ness versus limerick-ness in a way that highlights this rhetorical structure thing.

As @tcarmody noted, it’s a bad writer, but it gets genre. He pointed me to this thread discussing GPT-3’s attempts at math proofs:

The GitHub Copilot project, which uses OpenAI Codex (a GPT-3 descendant) to generate code from descriptive prompts, is another example. It’s roughly as good as a new developer who scours Stack Overflow and copies snippets without understanding how the software works.