Morning, friends! Last week I posted about Spidergram, the web analysis tool @autogram_is just released on GitHub. Over the holidays, we’ll be doing a lazy, weekdays-only advent calendar — showing interesting tricks and explaining the ideas behind it. https://github.com/autogram-is/spidergram

Today’s a little meta: Just setting up and running through a simple node.js project that crawls @beep’s web site. In subsequent days we’ll talk about extracting custom data, doing statistical analysis, and more, but for now? Let’s get it ~workin’~.

First, setup: we Spidergram’s storage around @arangodb, a “multi-modal” database that stores complex documents AND knowledge-graph style relationships in a single db. There are downloadable installers for most platforms on their site: https://www.arangodb.com/download-major/

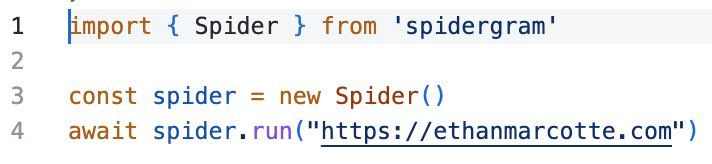

With that out of the way, I popped open a new node.js project, ran npm install spidergram to pull in Spidergram and its dependencies… then dropped the following code into the index.js file:

Once that’s done, npm run start kicks off the spidering process — visiting Ethan’s home page and following all the links. Every page is now stored in the Arango database, every link on the site is recorded, every relationship is tracked, every downloadable PDF cataloged.

Tomorrow we’ll get into how that data can be queried, sliced, diced, and processed — to do things like identifying difficult-to-reach pages, plotting the way the site’s content publishing patterns have changed over time, and more.